Thinking Fast and Slopes

In a networked economy, overestimating low-probability events is not irrational — it's optimal.

I begin the article with a summary of it, courtesy of ChatGPT:

In a rapidly changing and interconnected world, traditional decision-making theories may no longer fully encompass the complexities of the modern economy. The rise of non-linear dynamics, power law distributions, and extreme outcomes has led to an environment where risk-taking and adaptability are increasingly crucial. In this article, we will explore the limitations of prospect theory, introduced by Daniel Kahneman in his book "Thinking, Fast and Slow," and discuss how it may not be entirely applicable to our current economic landscape. We will also consider how embracing both caution and boldness can enable individuals, companies, and societies to navigate this new era of uncertainty and capitalize on the opportunities it presents.

Winning starts with knowing what game you're playing. When it comes to the economy, the game is constantly changing, but it usually remains in the same, well, ballpark. But every few decades or centuries, the game changes completely. And when it does, following the advice of the previous era becomes a recipe for disaster.

In 2011, Daniel Kahneman published a book called Thinking, Fast and Slow, which popularized "prospect theory," a psychological explanation for how people make decisions under risk and uncertainty that he and Amos Tversky first published in 1979. The idea is that people tend to make choices based on how they think they'll feel about the potential gains and losses rather than on the actual probabilities of those outcomes.

A core tenet of prospect theory is the notion that people tend to overestimate low-probability events. For example, the theory suggests that spending on insurance is influenced by loss aversion and the overestimation of low-probability events, leading people to seek protection against potential losses and rare events, even at a higher cost.

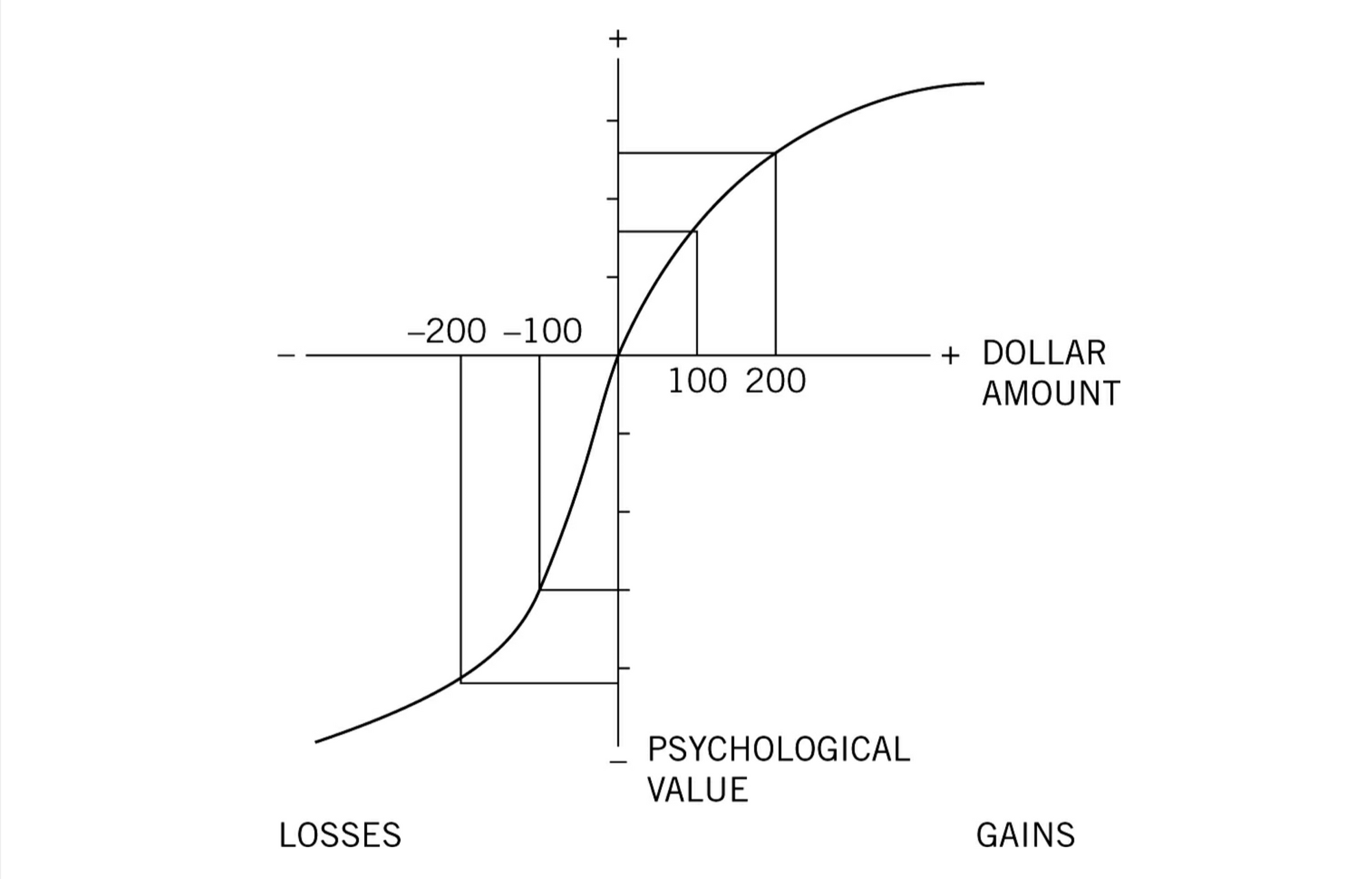

By "loss aversion," Kahneman refers to his theory on how people decide when to gamble and when to stick to a guaranteed outcome. He points out that humans tend to be more cautious when they stand to gain something and more willing to take risks when trying to avoid or minimize losses.

Kahneman sees this asymmetry as a bias driven by emotions rather than a cold calculation. Or, as he would put it, driven by fast, intuitive-emotional thinking (System 1) rather than slow, systematic-rational thinking (System 2). Kahneman argues that humans tend to experience the pain of losses more intensely than the pleasure of equivalent gains, making them more risk-averse in the domain of gains and risk-seeking in the domain of losses. He illustrates this point in the following chart. As you can see, the psychological effect of losing $100 is twice as intense as the effect of winning $100.
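To make the asymmetry concrete, here is a minimal sketch of a prospect-theory-style value function. The curvature of 0.88 comes from Tversky and Kahneman's 1992 estimates; the loss multiplier of 2.0 is my own assumption, chosen to mirror the "twice as intense" figure above rather than taken from the book. The function name is hypothetical.

```python
# Sketch of a prospect-theory-style value function (illustrative parameters).
ALPHA = 0.88   # curvature; Tversky & Kahneman's 1992 estimate
LAMBDA = 2.0   # loss multiplier; assumed here to match "twice as intense"


def subjective_value(outcome: float) -> float:
    """Felt value of a gain or loss, measured from a reference point of zero."""
    if outcome >= 0:
        return outcome ** ALPHA
    # Losses use the same curve, mirrored and scaled up by LAMBDA.
    return -LAMBDA * ((-outcome) ** ALPHA)


gain = subjective_value(100)
loss = subjective_value(-100)
print(f"winning $100 feels like {gain:+.1f}")
print(f"losing  $100 feels like {loss:+.1f}")
```

With these parameters, the loss is felt exactly twice as strongly as the equivalent gain, and the curve flattens as amounts grow in either direction.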

According to prospect theory, the overestimation of low-probability events can also be observed when people seek gains. Gamblers often overestimate the likelihood of winning a rare, high-value prize, even though the actual probability of winning is very low. This overestimation of low-probability events can encourage individuals to make bets that seem irrational when one considers the odds and the gap between expected value and costs.
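The same point can be sketched with a probability weighting function. The functional form and the 0.61 parameter below follow Tversky and Kahneman's 1992 estimates for gains; treat both as one illustrative choice, not as the only way to model this. The function name is hypothetical.

```python
# Sketch of a probability weighting function (illustrative parameter).
GAMMA = 0.61  # Tversky & Kahneman's 1992 estimate for gains


def decision_weight(p: float) -> float:
    """Map a stated probability p (between 0 and 1) to the weight it gets in a decision."""
    numerator = p ** GAMMA
    denominator = (p ** GAMMA + (1 - p) ** GAMMA) ** (1 / GAMMA)
    return numerator / denominator


for p in (0.01, 0.10, 0.50, 0.90):
    print(f"stated {p:.2f} -> weighted {decision_weight(p):.3f}")
```

Under these assumptions, a 1% chance gets a weight several times larger than 1%, while a 90% chance gets a weight below 90%.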

The book mentions other biases that interact to produce various behaviors that are not "reality-bound." And indeed, on paper, many human behaviors are clearly irrational and inconsistent. But things get more complicated when you apply the theory to real-life situations. If you take a risk and die, you can't take any more risks, and the game is over. If you avoid risk and survive, you can always gain something else tomorrow. Likewise, if your capital is finite and you lose all of it on one bet, you're out of the game. But if you only bet some of it and gained less than you could have, you can always make another bet tomorrow.
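The "out of the game" logic can be sketched with a small simulation. Everything here is hypothetical: a repeated even-money bet with a 60% chance of winning, played 20 times, once staking the whole bankroll each round and once staking a fifth of it.

```python
import random
import statistics


def play(fraction, rounds=20, win_prob=0.6, start=100.0, rng=None):
    """Stake a fixed fraction of current wealth on an even-money bet each round."""
    rng = rng or random.Random()
    wealth = start
    for _ in range(rounds):
        if wealth <= 0:
            break  # ruined: no capital left, no more bets
        stake = wealth * fraction
        if rng.random() < win_prob:
            wealth += stake
        else:
            wealth -= stake
    return wealth


rng = random.Random(0)  # fixed seed so the run is repeatable
all_in = [play(1.0, rng=rng) for _ in range(2000)]
partial = [play(0.2, rng=rng) for _ in range(2000)]

median_all_in = statistics.median(all_in)
median_partial = statistics.median(partial)
print("median wealth, betting everything:", median_all_in)
print("median wealth, betting a fifth:   ", round(median_partial, 1))
```

Even though every single bet is favorable, the all-in player is wiped out by the first loss, while the partial bettor always has something left to bet tomorrow.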

And in many situations, we have limited information on the costs and consequences of our actions. We're not dealing with the choice between a "sure thing" and a "gamble"; we're dealing with two different gambles with unknown consequences. Hence, we are forced to gamble whether we like it or not, so it makes more sense to make bets that have a higher potential upside — within reason.

The book itself is more nuanced and describes how different biases and tendencies moderate each other's effect. It also makes the implicit assumption that humans operate in a world in which outcomes are normally distributed and consequences are known.

What struck me about prospect theory is not its weaknesses but how it declines in value the farther we move from the old industrial world. This happens for two reasons. The first reason is that outcomes are becoming harder to predict. And the second is that fewer outcomes are normally distributed.

Let's unpack these two sentences. From an entrepreneur's perspective, the world was more predictable 50 years ago. As I pointed out in Productivity and Inequality:

In the industrial world of manual labor, output is a function of input: More work results in higher production. An increase in productivity affects the amount of output for a given unit of input, but it doesn't change the basic equation. And so, as the productivity of manual laborers increased, so did their pay, because they could now deliver more goods per hour.

[But] In knowledge work, the input-output relationship is not linear. More input does not necessarily produce more output; sometimes, it produces nothing. Let's assume three different companies are trying to develop a new app, advertising campaign, or exotic financial product. The number of people they "throw" at the project is not the key factor in determining whether the project succeeds (or even gets built at all).

Our economy is increasingly dependent on nonlinear dynamics, which makes it much harder to predict the outcomes of different actions. Kahneman acknowledges as much in a recent interview. Asked whether we can expect the range of human prediction to diminish, he says:

That's a very interesting question... there seems to be a lot of evidence that, at least in the domain of technology, change is exponential. So it's becoming more and more rapid. It's clear that as things are becoming more and more rapid, the ability to look forward and to make predictions about what's going to happen diminishes. I mean, there are certain kinds of problems where you can be pretty sure there is progress and you can extrapolate. But, in more complex prediction questions, at a high rate of change, you really have no business, I think, forecasting.

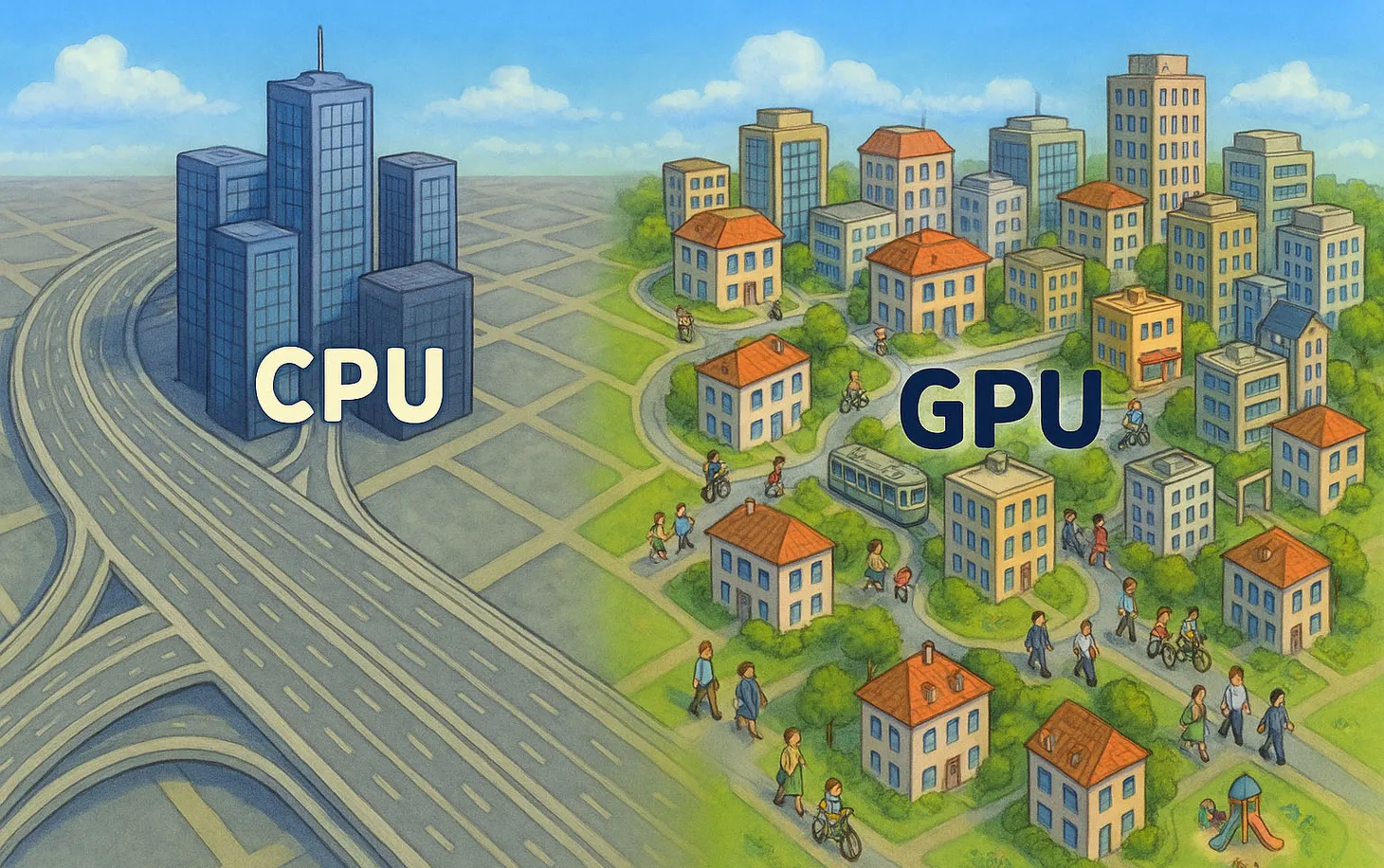

This brings us to the second point. The industrial world was characterized by scarcity, silos, and constraints. The key to success was controlling supply rather than controlling demand. Output was a linear function of input — the more capital, labor, and material you put in, the more widgets you got to produce and sell. Work was typified by large groups of equally-trained employees doing relatively identical tasks and earning more or less the same. And leisure was typified by masses of relatively-equally-paid people buying mass-produced goods that were marketed through a handful of mass-media channels.

I dive deeper into how and why this happened here. But for our purposes, the point is that normal distributions were endemic to the industrial world of scarcity and constraints. Things progressed relatively slowly. It was hard for any single firm or person to get too far ahead or fall too far behind. And yes, there were giant firms and wealthy people, but the gaps between winners and losers were smaller, and the middle was deep and wide.

Now consider our own economy. It is characterized by abundance, interconnection, and lack of constraints. In most industries, the key to success is controlling demand (getting attention) rather than controlling any specific resources or factors. Output is non-linear — a single person with a computer can outgun a giant company and produce a billion-dollar app or a billion-views video. Increasingly, work is typified by diverse groups of people working in a variety of places and styles without rigid hierarchies. And leisure is typified by personalized and niche products or hits that become irrelevant almost as soon as they surface.

We live in a networked world where power law distributions are popping up everywhere. The distribution of income is far from normal. The gaps between winners and losers are massive. The giant firms are larger than ever (in terms of market cap), while some of their competitors are as small as a soccer team. It is a world of extreme outcomes and extreme uncertainty.
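To get a feel for the difference, here is a rough sketch that draws 10,000 outcomes from a normal distribution and 10,000 from a Pareto (power law) distribution, then asks how much of the total goes to the top 1%. The parameters are my own assumptions: a mean of 100 with a standard deviation of 15 for the normal world, and a Pareto shape of 1.16, a value often associated with the 80/20 rule.

```python
import random


def top_share(values, top_fraction=0.01):
    """Share of the total held by the largest top_fraction of values."""
    ranked = sorted(values, reverse=True)
    cutoff = max(1, int(len(ranked) * top_fraction))
    return sum(ranked[:cutoff]) / sum(ranked)


rng = random.Random(42)  # fixed seed so the run is repeatable
n = 10_000
normal_world = [rng.gauss(100, 15) for _ in range(n)]
power_law_world = [rng.paretovariate(1.16) for _ in range(n)]

normal_share = top_share(normal_world)
power_law_share = top_share(power_law_world)
print(f"top 1% share, normal world:    {normal_share:.1%}")
print(f"top 1% share, power law world: {power_law_share:.1%}")
```

In the normal world, the top 1% holds only slightly more than 1% of the total. In the power law world, a handful of outcomes account for a large part of everything.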

The old "rational" approach does not apply in such a world. Kahneman himself has said as much. In a recent interview, Joseph Walker asked him to square prospect theory with Nassim Nicholas Taleb's idea of "Black Swans," a term for "tail risks": events with low probability but colossal impact. Here's the relevant excerpt:

WALKER: So, as you know, Nassim Taleb argues that we underestimate tail risks. Does that contradict prospect theory?

KAHNEMAN: Well, no, I would say. In prospect theory, you overweight low probabilities, which is one way of compensating. Now, what Nassim says, and correctly, is, “You can't tell — you really cannot estimate those tail probabilities.” And in general, it will turn out — it's not so much the probabilities, it's the consequences. The product of the probabilities and consequences turn out to be huge with tail events.

Prospect theory doesn't deal with those — with uncertainty about the outcomes. So what Nassim describes, as I understand it, is you get those huge outcomes occasionally, very rarely, and they make an enormous difference. This is defined out of existence when you deal with prospect theory, which has specific probabilities and so forth. So prospect theory is not a realistic description of how one would think in Nassim Taleb’s world. Certainly not a description of how one should think in Nassim Taleb’s world.
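The "product of the probabilities and consequences" from the excerpt can be illustrated with a toy calculation. The three scenarios and their numbers below are entirely made up, and they assume we know the tail probability, which is precisely what Taleb says we cannot do in practice.

```python
# Hypothetical scenarios: (name, probability, payoff). All numbers are invented.
scenarios = [
    ("ordinary", 0.900, 1),
    ("good", 0.099, 10),
    ("tail", 0.001, 10_000),
]

# Expected value is the sum of probability times consequence.
expected_value = sum(p * payoff for _, p, payoff in scenarios)

# Fraction of the expected value contributed by each scenario.
contributions = {
    name: p * payoff / expected_value for name, p, payoff in scenarios
}
tail_share = contributions["tail"]

for name, share in contributions.items():
    print(f"{name:>8}: {share:.1%} of expected value")
```

In this toy setup, the event that happens once in a thousand tries accounts for most of the expected value, so ignoring it means ignoring most of what matters.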

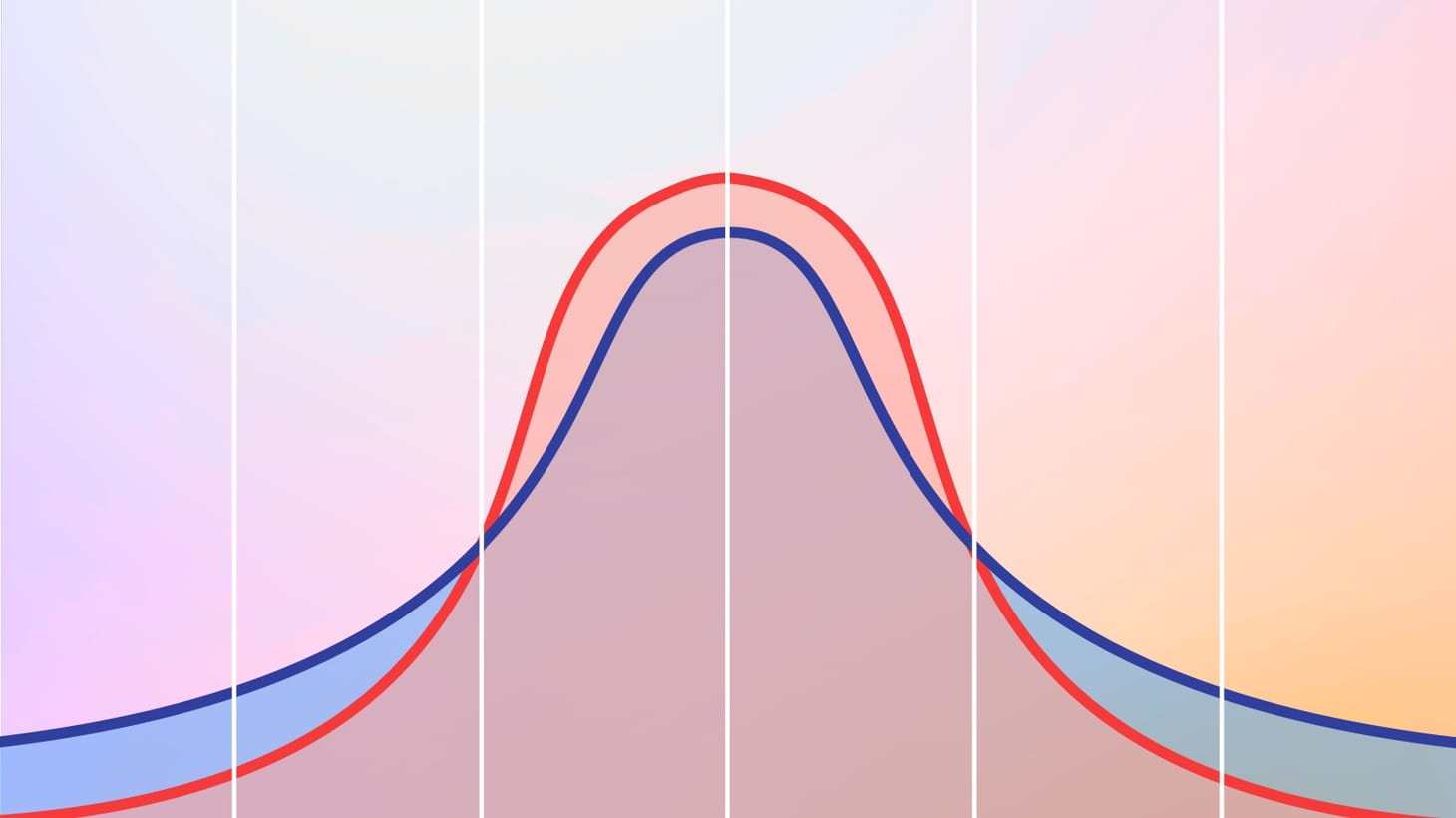

Taleb celebrated Kahneman's acknowledgment that there is no "bias" in paying too much attention to events at the fat tails of the distribution — as opposed to the middle, where everyone was traditionally instructed to focus.

Huge.

Daniel Kahneman acknowledging publicly that Prospect Theory does not work under fat tails (or unknown probabilities), hence there is no "bias" in these circumstances. https://t.co/ix8pwXbEsN

Nassim Nicholas Taleb (@nntaleb), April 15, 2023

We live in a world where low-probability outcomes play a growing role. A silly video can go viral within days and capture a massive audience. Within weeks, the most dominant companies can lose significant market share to a new app. In such a world, overestimating low-probability events is not irrational — it's optimal. If you don't prepare for crazy outcomes or make crazy bets, you're almost guaranteed to lose out.

This is true for individuals and companies, and it is true for whole countries and societies. We need to push into the tails of the distribution in both directions: Brace ourselves for unimaginable turbulence and encourage bold, crazy, optimistic bets.

In practice, this means developing new safety nets and systems that enable us to live with dignity, regardless of whether we win or lose. And it also means investing in innovation and making bolder bets on new ideas and technologies. Over-caution and over-confidence should not be at odds. We need to build a world where everyone can experiment without the fear of going hungry or homeless — because one of those experiments will make all the others worthwhile.

Best,


Dror Poleg Newsletter
