AI and the Long Tail

AI can reduce inequality and polarization by making the internet less social.

The internet breeds inequality. A small number of websites receive the most visits. A few people receive most of the views on YouTube and TikTok. A small number of podcasts and newsletters attract most of the subscribers.

This inequality is inherent to networks. The more extensive the network, the larger its hubs tend to be. Large hubs are efficient — they make it easier to connect the highest number of nodes. This is apparent when you look at an airline map. A super-connected hub like Chicago, London, or Singapore allows passengers to fly from anywhere to anywhere with a single connecting flight.
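The efficiency of hubs comes down to simple arithmetic. The sketch below is an illustration rather than a model of any real airline network: it assumes one idealized hub and counts undirected routes, and the function names are made up for this example.

```python
def point_to_point_routes(n_airports: int) -> int:
    """Routes needed to link every pair of airports with a direct flight."""
    return n_airports * (n_airports - 1) // 2


def hub_routes(n_airports: int) -> int:
    """Routes needed when one airport is a hub linked to all the others.

    Any two airports are then at most one connection apart (via the hub).
    """
    return n_airports - 1


for n in (10, 100, 1000):
    print(n, point_to_point_routes(n), hub_routes(n))
```

The direct-flight count grows with the square of the number of airports, while the hub count grows linearly. That gap is why larger networks tend to lean harder on a few big hubs.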

Online social networks are even less equal than airline networks. Partly because they are larger (there are more people online than airports offline). And partly because they are social. The term "social network" describes platforms like Facebook or WeChat. But in reality, the whole web is a social network made of pages and posts generated by individual people.

The tools we use to explore the web are also social. And their social nature contributes to (or causes) massive inequality.

How do we decide where to go and what to pay attention to online? We actively use search engines, or we passively consume content on feeds. In both cases, we rely on systems that depend on social signals.

Harnessing the Crowd

In 1998, two Stanford Ph.D. candidates published a paper describing a new search engine. In The Anatomy of a Large-Scale Hypertextual Web Search Engine, Larry Page and Sergey Brin introduced two important innovations. They assessed the quality or "PageRank" of each web page based on the number of other pages that linked to it (and on how highly ranked those linking pages were themselves). And they relied on the text of each link to determine the content of the link's target.

Under the system devised by Page and Brin, popular pages (those with lots of incoming links) were deemed credible and important. And the topic of each page was determined based on the text in the links to it on other sites. So, if page A included a link to page B with the text "cake recipe," Brin and Page's search would send anyone looking for "cake recipe" to page B. In short, Brin and Page's search engine determined the quality and relevance of each page based on the "opinion" of other pages: the number and description of incoming links.
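Here is a minimal sketch of those two ideas on a four-page toy web. It is a simplification, not Google's actual implementation: the pages, link texts, and the `search` helper are invented for illustration, and the ranking uses the textbook iterative form of PageRank with a damping factor of 0.85.

```python
from collections import defaultdict

# Hypothetical toy web: (source page, target page, link text).
LINKS = [
    ("A", "B", "cake recipe"),
    ("C", "B", "best cake recipe"),
    ("D", "B", "chocolate cake"),
    ("D", "A", "baking blog"),
    ("B", "A", "baking blog"),
]


def pagerank(links, damping=0.85, iterations=50):
    """Score pages by incoming links, weighted by the linker's own score."""
    outgoing = defaultdict(list)
    pages = set()
    for source, target, _text in links:
        outgoing[source].append(target)
        pages.update((source, target))
    pages = sorted(pages)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            # A page with no outgoing links spreads its score evenly.
            targets = outgoing.get(page) or pages
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank


def search(query, links, ranks):
    """Pages whose incoming link text contains every query word, best first."""
    words = set(query.lower().split())
    matches = {
        target
        for _source, target, text in links
        if words <= set(text.lower().split())
    }
    return sorted(matches, key=lambda p: ranks[p], reverse=True)


ranks = pagerank(LINKS)
print(search("cake recipe", LINKS, ranks))  # ['B']
```

Note that page B never has to mention cakes at all. Its topic and its standing both come from what other pages say about it.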

It worked. By the end of 1998, Brin and Page had incorporated Google, and the search engine quickly asserted itself as superior to the indexes and crawlers that were popular at the time. Before Google, people navigated the web by going to curated "portals" such as Yahoo, AOL, and MSN or used primitive search engines that struggled to compete with human-curated lists.

Initially, Google's approach seemed far-fetched. In 1997, Brin and Page were worried that their search engine project was taking too much time away from their Ph.D. studies and wanted to sell it to Excite.com, one of the world's most popular sites and search engines. They offered to sell the project to Excite's CEO for $1 million, and later for as little as $750,000. Years later, Excite CEO George Bell said the deal fell through because he was unwilling to let the new technology power Excite's leading search engine.

Page and Brin kept Google to themselves, and the rest is history. As of January 2023, Google commands 85%-90% of the global search market. As the web continued to grow, human curation became impossible, and Google's automated "social" approach provided the best way to make sense of the seemingly infinite amount of content. The same approach also inspired the algorithms that power the "feeds" of social media platforms such as Facebook, YouTube, and TikTok: people are shown content that other people like. The recommendations of people with more followers count more than those of people with fewer.

The social approach helped Google and others deliver relevant content at scale. But it came at a cost.

Stunted Democratization

In 2004, Chris Anderson published The Long Tail, an article in Wired magazine that described how the internet would transform how we produce and consume content. The article was later expanded to an entire book. Anderson identified three forces that are reshaping the world of content:

- The democratization of production: Computers, smartphones, and digital cameras enable anyone to create content cheaply, anytime, anywhere.

- The democratization of distribution: The internet enables content to be sent anywhere, instantly, at almost no cost.

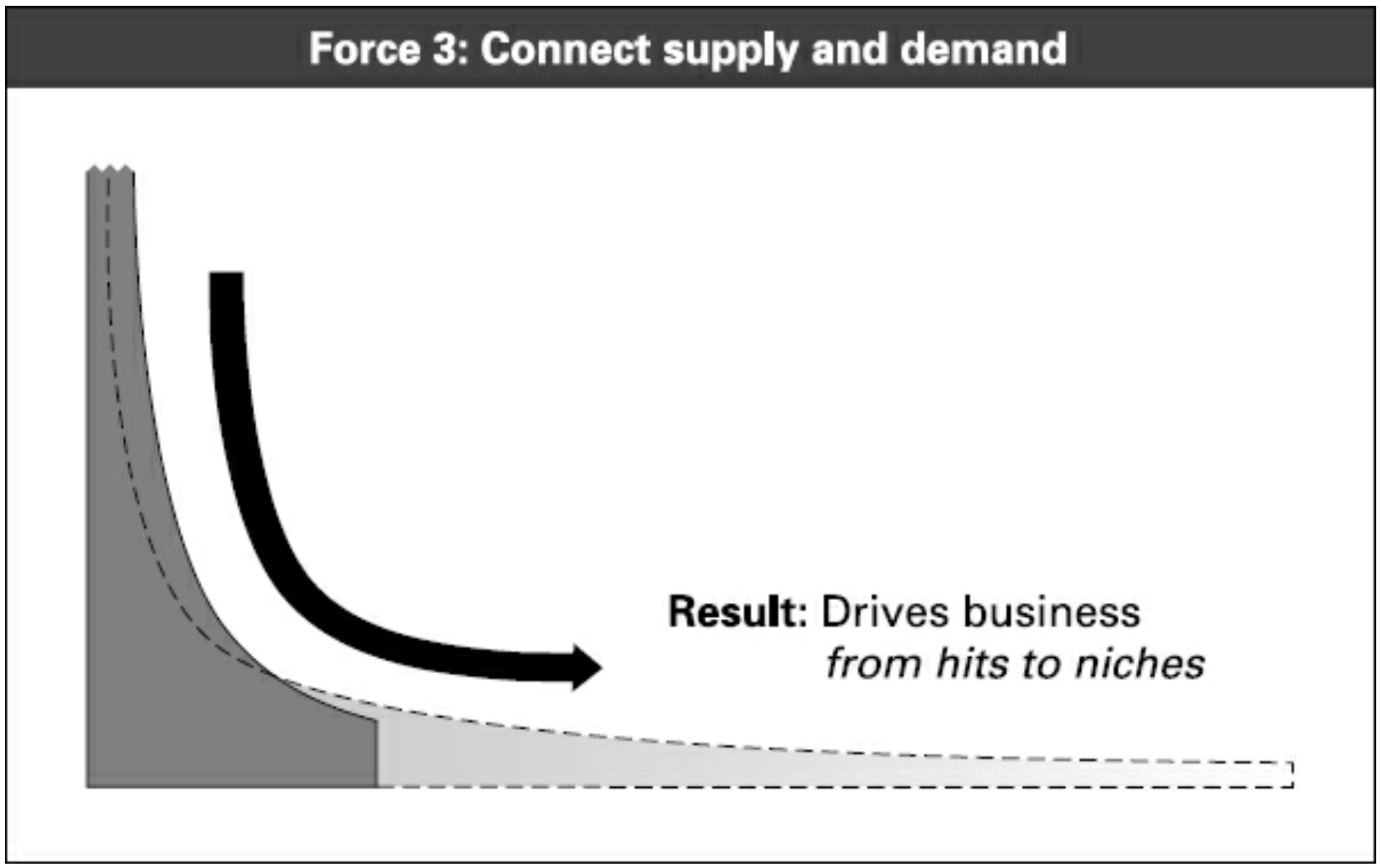

- The reduction of search costs: Software and online platforms help users find the niche content that suits their specific preferences. This, according to Anderson, happens thanks to "Google’s wisdom-of-crowds search" and "iTunes' recommendations" as well as "word-of-mouth" recommendations on "blogs" and online reviews.

Anderson predicted that these forces would drive more attention (and revenue) away from traditional hits towards a "long(er) tail" of niches and smaller publications and creators.

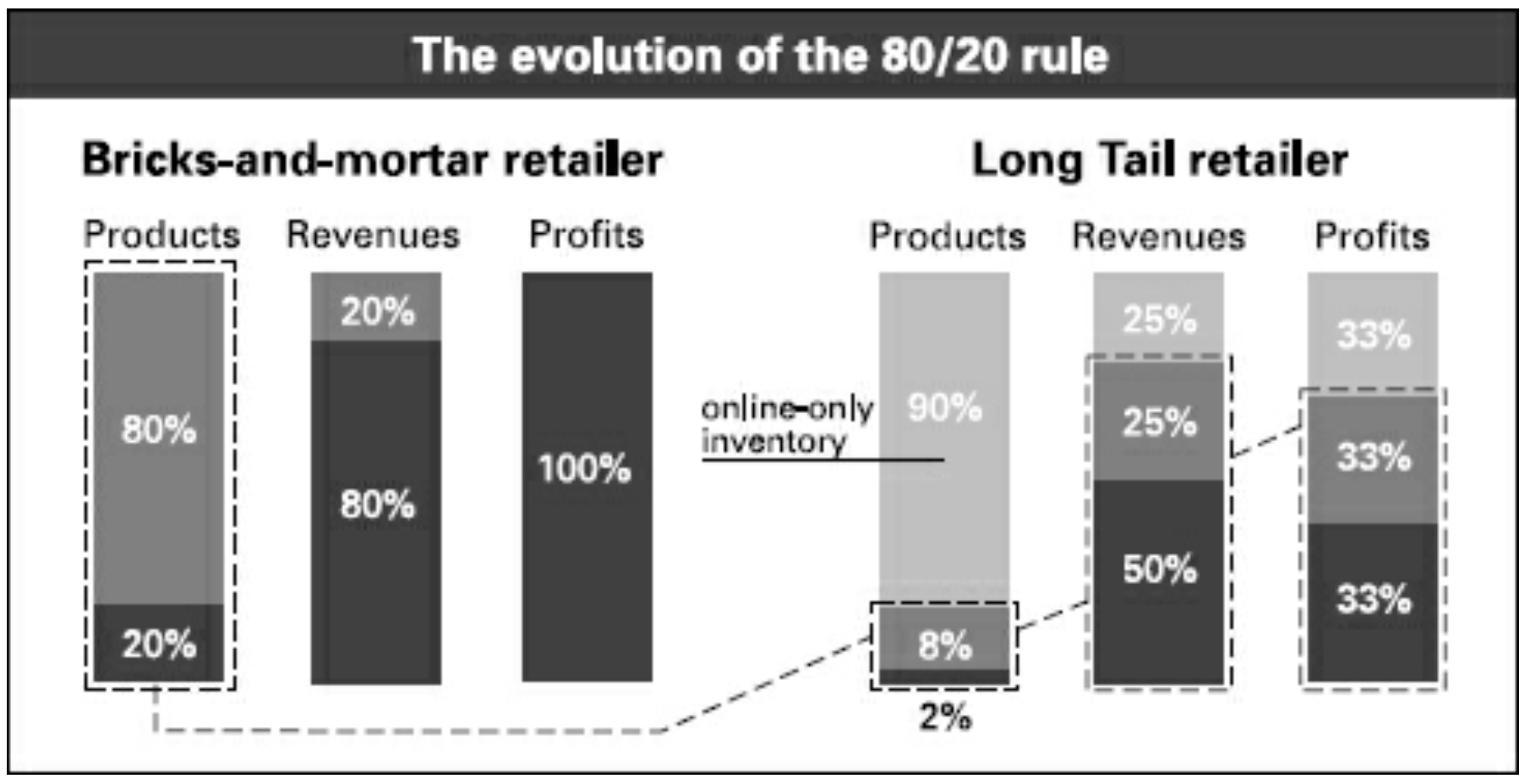

He expected the internet to bring the "death of the 80/20 rule" — where 20% of all products generate 80% of the revenue (and close to 100% of the profits).

But things turned out differently. As Spotify's former chief economist, Will Page, points out in Tarzan Economics, music listeners spend 90% of their time listening to less than 2% of all songs on streaming platforms. Cheap production, cheap distribution, and "wisdom-of-crowds" recommendations made the world less equal. Contrary to Anderson's prediction, the hits at the head of the distribution are more popular and economically dominant than ever.

To be fair to Anderson, the web did democratize opportunity: More people can now make a living from creating content, and consumers dip into many more niches and genres. But the gap between top and average performers is wider than ever. More people can earn $100,000/year from doing what they love. But a handful of people can become billionaires and earn more than any musician (or filmmaker or writer or teacher) ever did.

This inequality results from the social nature of the algorithms that power our search engines and feeds. Such algorithms drive more traffic towards whatever seems to be popular: If a few people like a post, the post is shown to more people; if a few people listen to a song, the song is recommended to more people. The rich get richer by design. Whatever's "popular" is considered adequate and worth spreading.
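A toy simulation shows how strong this feedback loop can be. It is a deliberately crude sketch, not a description of how any real platform ranks content: every item starts out identical, and the only difference between the two runs is the hypothetical `social` flag, which makes each new view more likely to land on items that already have views.

```python
import random


def simulate_views(n_items, n_views, social, seed=0):
    """Hand out views one at a time and return the view count per item."""
    rng = random.Random(seed)
    views = [0] * n_items
    # Every item starts with one "ticket"; in social mode each view adds one.
    tickets = list(range(n_items))
    for _ in range(n_views):
        if social:
            item = rng.choice(tickets)  # chance proportional to views + 1
            tickets.append(item)
        else:
            item = rng.randrange(n_items)  # every item equally likely
        views[item] += 1
    return views


def top_share(views, fraction):
    """Share of all views captured by the top `fraction` of items."""
    k = max(1, int(len(views) * fraction))
    ranked = sorted(views, reverse=True)
    return sum(ranked[:k]) / sum(views)


neutral_views = simulate_views(1000, 50_000, social=False)
social_views = simulate_views(1000, 50_000, social=True)
print(f"top 2% share, neutral: {top_share(neutral_views, 0.02):.1%}")
print(f"top 2% share, social:  {top_share(social_views, 0.02):.1%}")
```

In the social run, the top 2% of items end up with a much larger share of all views than in the neutral run, even though no item is "better" than any other. Early luck compounds.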

This dynamic also contributes to political polarization and to the decline in the quality of mainstream publications. To stand out, you must stoop lower to draw attention, take a position that galvanizes a specific group of people, or both.

Perhaps this is the price of progress. We now have access to more information, music, videos, and opinions than ever. Anyone has a voice. More people than ever have an opportunity to become stars and make a living doing what they love. It's a trade-off that society might be able to live with. If there's no other option, we can learn to live with inequality, polarization, and degraded mainstream culture.

But what if there is another option?

The Anti-social Web

Microsoft is an early investor in OpenAI, the maker of ChatGPT. For those not familiar, ChatGPT is a piece of intelligent software that "interacts in a conversational way." ChatGPT can answer questions, "answer followup questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests." You can ask it to explain the "labor theory of value" or to write a cake recipe in the style of William Wordsworth.

Microsoft is contemplating a massive $10 billion round of further investment in OpenAI. The investment is not just financial; it is strategic. As Bloomberg reports:

Microsoft [is] working to add ChatGPT to its Bing search engine, seeking an edge on [Google's] dominant search offering. The bot is capable of responding to queries in a natural and humanlike manner, carrying on a conversation and answering follow-up questions, unlike the basic set of links that a Google search provides.

ChatGPT is still wildly inaccurate. But let's assume it gets better and offers a genuine alternative to Google. In theory, Bing could provide a far superior user experience: Users will ask a question and receive a straightforward answer — instead of the list of websites provided by Google. Google itself might follow suit and deploy its capabilities to make its own search engine more conversational and human-like.

That's the story everyone is focused on: A.I.'s impact on the experience of searching the web. But there's a much bigger story here — a potential revolution that can impact the distribution of human attention, income, and the overall quality of the content we consume.

To understand what might happen to search, let's imagine a world without search engines.

Those Who Know

How did we survive before Google? We relied on experts. Experts have the knowledge required to evaluate the quality, veracity, and validity of information. An economist can evaluate an economics paper. A physicist can evaluate a physics paper. An editor can evaluate the reasoning and sources employed by a writer. Even if the content deals with brand-new ideas, experts can, at least, judge the author's methodology, approach, and reputation (or know who to ask if they can't).

Before the internet, we relied on experts and avoided the inherent inequality of social recommendation systems. An expert could anoint "winners" based on various objective standards — ignoring or downplaying the role of popularity. When objective standards were unavailable, experts could at least rely on pre-agreed ones.

We can generalize and say that in the past, content became popular because it was good; today, content is considered good because it is popular. This doesn't mean that popular content isn't objectively good. But it does mean that popularity and efforts to generate "engagement" overwhelm all other factors.

Popularity mattered even in the past. But those who were around before the internet and cable T.V. know what I mean: We used to live in a world of scarcity, where expert gatekeepers decided which music, films, and articles deserved to reach a broader audience.

Those who lived before printing presses had an even better sense of that world. Indeed, the world of scarcity was unequal and unfair in other ways. Experts (and priests and monarchs) prevented most content from getting attention, and most people had no chance of ever getting heard.

But even with the best intentions, experts had to limit opportunity. They didn't intend to, but they had no choice. Before the internet, there was a physical limit on how much content could be assessed and distributed. A limited number of experts could not read, watch, and listen to everything anyone ever produced.

And even if experts could assess all the world's content, distribution was still constrained. Cinemas could only screen around 500 films each year. T.V. and radio could only fill 24 hours a day. The cost of production and distribution made it unprofitable to publish more than a limited number of newspapers, books, albums, and games. The world of scarcity was somewhat more egalitarian, but it was still far from ideal. (80/20 is better than 98/2, but it is not as good as 50/50, where 50% of products or people get 50% of the attention and income.)

That was the downside of scarcity: More quality and equality at the cost of less opportunity and dynamism. The abundance of the web turned this formula on its head: Over the past two decades, we enjoyed more opportunity and change at the cost of lower mainstream quality, higher inequality, and more polarization.

Is it possible to get the best of both worlds? It might be.

An Abundance of Expertise

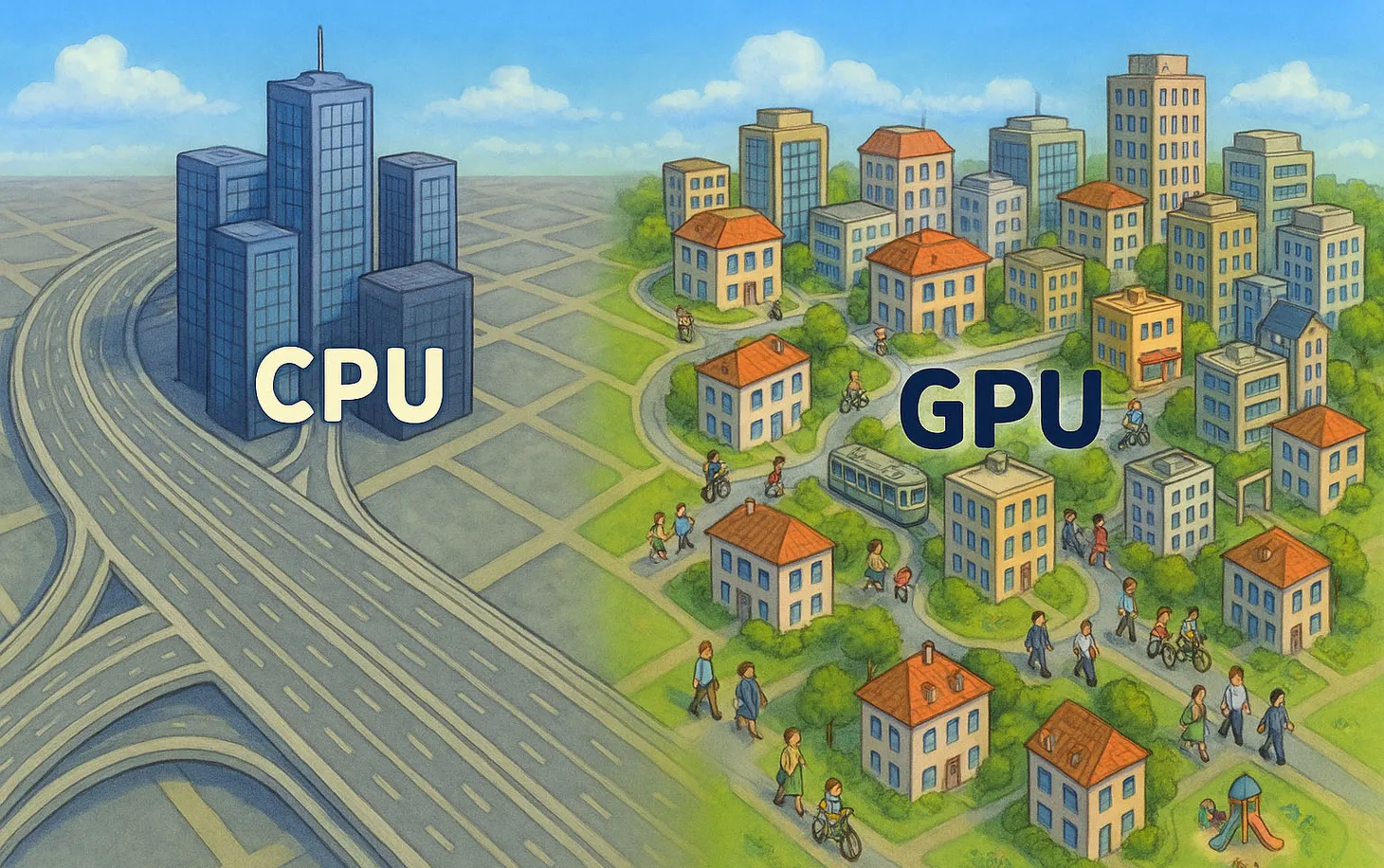

Artificial Intelligence could make expertise scalable. Instead of evaluating content the way Google would (popular = good), the next iteration of ChatGPT might be able to evaluate it the way an expert would (good = good).

Let me explain. Imagine a piece of software that actually understands what it reads. It doesn't have to do so "like a human"; an approximation would probably be enough: It can read a piece of content and determine the general topic, assess the methodology it uses, look into the sources it cites (and the sources that cite it), consider the clarity and logic of the argument, and determine whether it is worthwhile. It could do the same with music, paintings, and any other form of content: see whether they are coherent, how they fit in a long line of prior works, what patterns they draw on, and more.

What I have in mind is the approach of Yahoo with the scale of Google — curate the whole web based on a systematic assessment of the quality of each page rather than an approximation based on popularity and links.

In this vision, we will combine the digital democratization of content production, the web's cost-free distribution, and a true democratization of search (not just making search cheaper as Google did, but making it genuinely scalable and intelligent). In such a world, good content will no longer depend on the constraints of physical cinemas, airwaves, and printers or on the biases of social algorithms that are swayed by crowds.

In other words: Scalable expertise could usher in a world of better content, lower inequality, lower polarization, and more opportunity for more people.

And yes, Google already considers semantic meaning when it evaluates web pages. And even the most expert A.I. will have to rely on some social signals to determine credibility. But still, a shift from "social" to "intelligent" search can significantly alter the distribution of online likes and views and limit the size and occurrence of massive winners.

Of course, automated "experts" will have their own biases and quirks. As we become more reliant on their judgment, the world will be reshaped in unintended ways. Just like Chris Anderson in 2004, we might find that early trends appear very different after twenty years. And still, what have we got to lose? And do we really have a choice?

Have a great weekend.

Dror Poleg Newsletter
