God, AI, and the Scalable Class

Most people no longer need to work. Our survival depends on convincing them it's ok to do something else.


This week I felt like I had to stop and rethink. The latest advances in artificial intelligence forced me to question everything I think about the future of work. I've written extensively about some of the weirder possibilities for that future. But they seem less weird now. They seem like they might be very relevant within five years.

Yesterday, I shared with you a conversation I had with ChatGPT. If you haven't tried ChatGPT yet, please do. It's magical. It doesn't just answer questions; it also "understands" complex instructions and explains its reasoning. As the conversation progresses, it remains "conscious" of its previous answers and my stated preferences and uses this knowledge to give better and more engaging answers. And it's even better at coding. I can ask it how to build something, and it will provide the relevant code and tell me what to install and what other "ingredients" are needed to turn my idea into a functioning product.

And now, ChatGPT is being integrated with other apps and services. This will let it use its understanding to act in the real world: book flights on Expedia, look up information on Google Drive, email contracts to job applicants, and more.

The performance of ChatGPT and the "engine" that runs it is incredible. Even more astonishing is the rate of improvement over versions released mere months ago. It is starting to change our most basic assumptions about the meaning of "thinking" and "intelligence." At its core, ChatGPT is a statistical engine that identifies patterns in human-written content and guesses which word is most likely to come next. Technically, it doesn't understand what it says; it only predicts the most appropriate thing to say, given what has already been said.
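To make the "guess the next word" idea concrete, here is a toy sketch in Python. It is only a bigram counter, vastly simpler than the neural network behind ChatGPT, which works on tokens rather than whole words and looks at the entire preceding conversation rather than just the last word. The function names and the tiny corpus are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if `word` was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A hypothetical miniature "training set" of human-written text.
corpus = "the cup is on the bed . the diamond is in the cup . the cup is on the counter ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # prints "cup" (seen 3 times after "the")
print(predict_next(model, "cup"))  # prints "is"
```

Even this toy "knows" that "cup" tends to follow "the", but only because it counted that pattern; it has no idea what a cup is. Whether scaling up this basic idea yields something that behaves like understanding is exactly the open question.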

But that's where things get weird. The latest version shows signs of understanding. Consider this comparison between version 3.5 and the current version 4 of ChatGPT. Daniel Feldman asked the following question:

I'm in my house. On top of my chair in the living room is a coffee cup. Inside the coffee cup is a thimble. Inside the thimble is a single diamond. I move the chair to my bedroom. Then I put the coffee cup on the bed. Then I turn the cup upside down. Then I return it to rightside-up, and place the coffee cup on the kitchen counter. Where is my diamond?

The earlier version of ChatGPT (3.5) responded:

Based on the actions you described, the diamond should still be inside the thimble, which is inside the coffee cup on the kitchen counter. Moving the chair to the bedroom and turning the cup upside down would not have affected the diamond's location inside the thimble. Therefore, the diamond should still be inside the coffee cup, which is now on the kitchen counter.

As you can see, it's a typical "machine" answer — considering all the steps in order, relying only on the information it was provided, and concluding that the diamond is still inside the coffee cup, albeit now on the kitchen counter instead of the chair.

The current public version of ChatGPT (4) had a different answer:

When you turned the coffee cup upside down on your bed, the diamond inside the thimble would have likely fallen out onto the bed. The diamond is now on your bed.

This answer implies that ChatGPT is not simply relying on the data provided in the question. Instead, it also relies on what researchers call "a world model": an abstract representation of the space and time in which the story in the question unfolds.

Of course, ChatGPT also gets many things wrong. And the above might be a coincidence in which the answer implies an intelligence that is not really there. But these types of coincidences are increasingly common. And, of course, humans make lots of mistakes. Even when humans get things right, they tend to suffer from impostor syndrome and assume they can't consistently replicate their achievements.

This brings us to the possibility that "understanding" might mean "predicting the most appropriate thing to say in the context of what was already said." Maybe that's what humans have been doing all along. The conscious rationalization of this process and the stories we tell ourselves about how we think we think might serve some purpose, but they do not describe how we actually think.

In any case, it doesn't matter. A machine that can reason like a human in a variety of situations is a groundbreaking development. What does it mean for the rest of us?

As we look for an answer, let's take a detour to the pages of The New York Times.

Go Back to Work

Steven Rattner, a former investment banker, journalist, and Obama official, wrote an op-ed wondering whether "working from home [is] really working." He starts by describing what seems to him like a growing reluctance to work:

Whatever you want to call it, the attitude of many Americans toward work appears to have changed during the long pandemic — and, generally speaking, not for the better. This new approach threatens to do long-lasting damage to economic growth and prosperity.

Until Covid, most employed Americans had workdays that followed a decades-old pattern: Wake up, shower, breakfast, commute, spend at least eight hours in an office or a factory, commute home and maybe enjoy a glass of wine or a beer. Rinse and repeat, every Monday through Friday.

Just a fact of life for most, drudgery for many and enjoyment for a few, most often those closer to the pinnacle of responsibility and compensation.

A recent Wall Street Journal report noted that in a Qualtrics survey of more than 3,000 workers and managers, 38 percent said the importance of work to them had diminished during Covid and 25 percent said it had increased. (The rest said that it had not changed.)

More precisely, Rattner describes a growing reluctance to care about work. He sees this as a problem and assumes Americans should go back to the office and work more — or pay the price:

The changing work habits have spawned a push for a codification of what may already be a reality: a four-day workweek. Legislation to that effect has been introduced in California, Maryland and other states. Proponents argue that with an extra day of rest, diligent workers can accomplish as much as they did in five days. Perhaps. But put me down as skeptical about that and much of the notion that when it comes to work, less can be more.

Rattner may be right. But I think we're in the midst of a more profound change. People want to do other things with their time because the economy needs them to do other things. We simply no longer need so many people to do what we previously considered "work." Instead, we need people to do two other things:

- Consume. But in a way that generates signal and input that can guide further production (and consumption); and

- Do whatever they want and, somehow, uncover new jobs that will become important.

I'll expand on the first point in a moment. The second point might sound strange, but if you think about it, many of the services and products we consume would have seemed frivolous and unnecessary in 1900. We have dog walkers, sleep trainers, virtual girlfriends, diversity consultants, lactation consultants, and pet psychologists. There are many more professions to invent, and they will only be invented if more people experiment.

If Rattner were right, the economy would have collapsed a long time ago, and we would all be much poorer than our grandparents. So many of us spend our time doing things that would have seemed completely useless to a farmer or factory manager. We push paper, we come up with ideas, and we figure out how to convince other people to buy one laundry detergent over another. Etc. Etc.

We are able and required to spend our time in this manner because this is what the economy needs us to do. As I mentioned last week, Henry Ford was quick to realize that if his employees worked too hard, they wouldn't have time to buy cars:

"It is high time to rid ourselves of the notion that leisure for workmen is either lost time or a class privilege... People who have more leisure must have more clothes, they eat a greater variety of food... They require more transportation in vehicles."

The economy can't function and grow if people aren't given enough time to consume. The balance between production and consumption has been shifting for a long time. Implicitly, many or possibly most of us have already been spending most of our time consuming rather than producing. In the near future, this arrangement might become more explicit.

To understand how and why, let's return to AI.

Consumption is Production

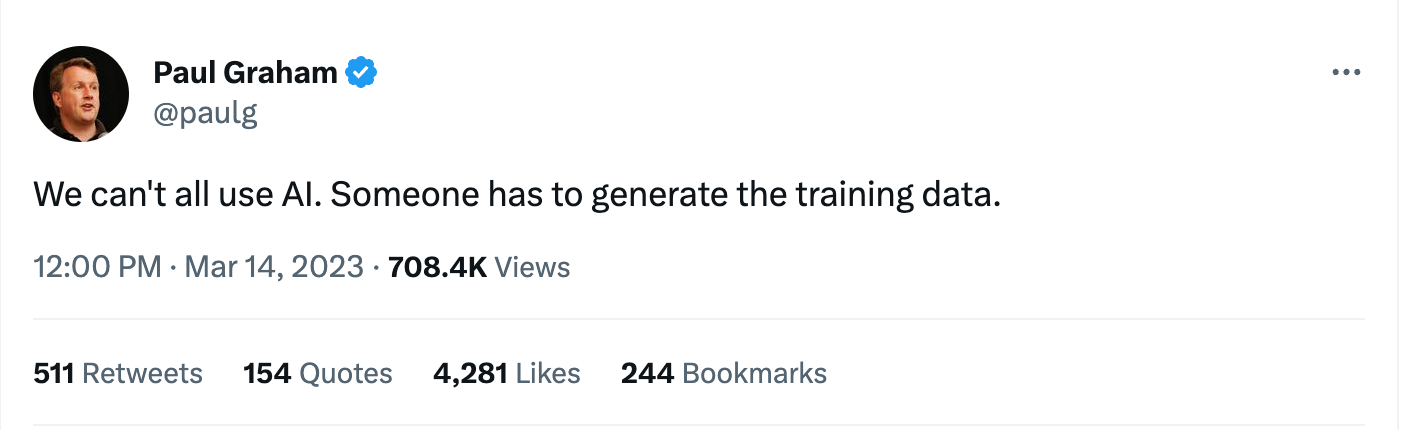

Last week, Paul Graham caught a lot of flak for the following tweet:

Paul was referring to the fact that the large language model that powers ChatGPT relies on processing vast quantities of content. It needs to find patterns in human-generated content to "guess" how to speak like a human. If everyone uses AI to generate content, AI will run out of human content to learn from.

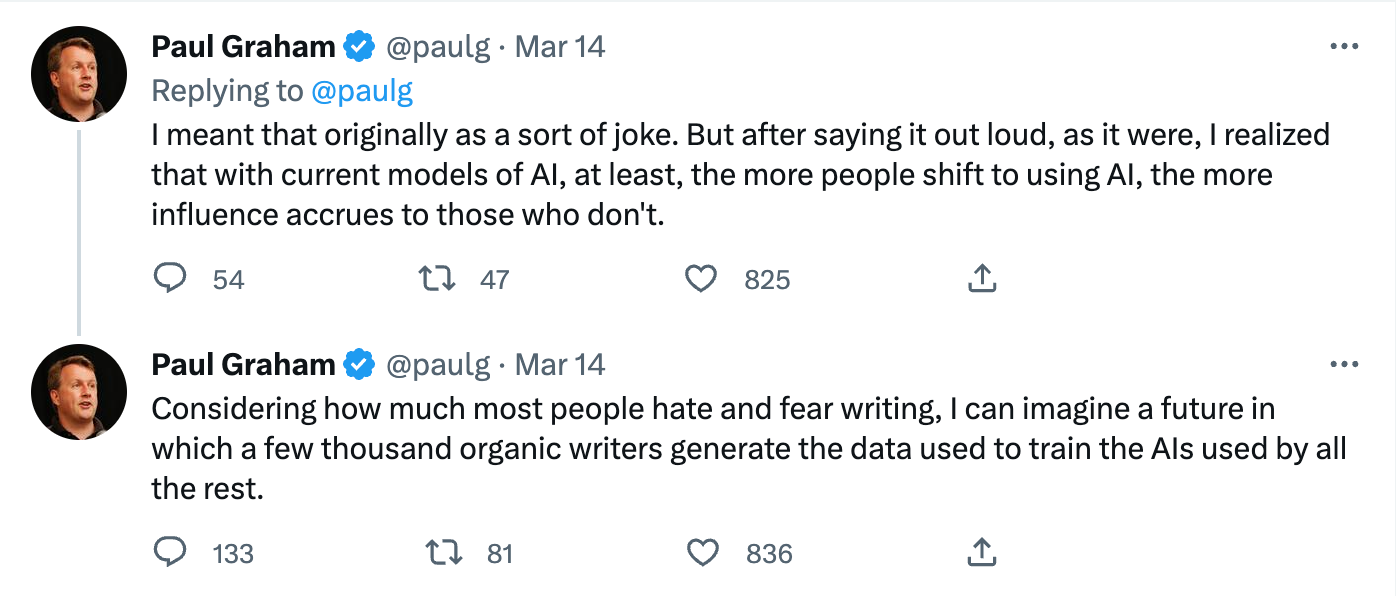

Paul, a successful inventor, entrepreneur, and venture investor, was criticized for implying that AI will serve a small elite and require everyone else to generate "fodder" for machines to learn more. But Paul meant the opposite. He later expanded on the original tweet:

Let's highlight the two most important sentences: "the more people shift to using AI, the more influence accrues to those who don't" and "a few thousand organic writers generate the data used to train the AIs used by all the rest." In the last sentence, you can replace "writers" with "doctors," "physicists," or "gym trainers."

In other words, AI enables a relatively small group of people to leverage their knowledge and serve millions or billions of others. And not just "serve" but actually enable other people to leverage elite expertise and sell it to their own customers. This is already possible with writing: ChatGPT enables anyone to write about almost any topic or to turn their own original thoughts into a coherent piece of writing in the style of anyone they choose. It also enables people to "mine" the insights of experts who have written extensively about a topic.

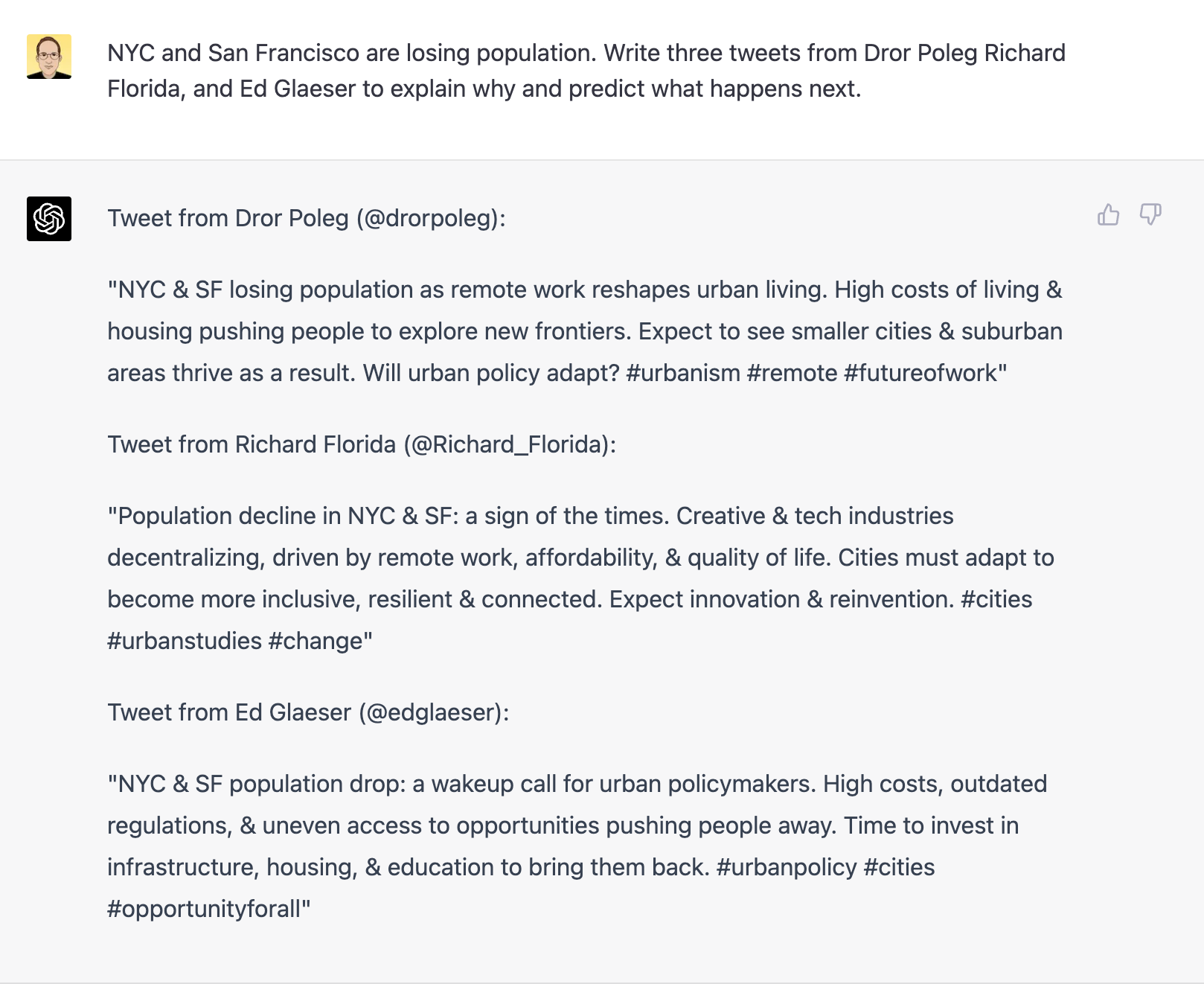

Consider the following interaction with ChatGPT:

Because Richard, Ed, and I have written publicly about related topics, ChatGPT can surmise what we'll say about a specific news item. Models trained in a more specialized manner can do an even better job.

And in many fields, expertise is much more specific and applicable than in mine. This means people can use AI to draw insights that are specific and monetizable.

At the moment, people can "mine" other people's ideas and make money from them. No business model or copyright system is in place to let experts benefit from this or collect royalties each time their ideas are used. But such systems will undoubtedly emerge in the coming years.

This scenario, however, assumes that most people will keep working, even if they carry a "lighter load" by relying on the insights of a narrow group of experts. In reality, if expertise can truly become so scalable, most people will not have to work at all. In many or most cases, you'll be able to get expert answers directly from the machine.

What will everyone do then? Consume. But consumption will serve a critical economic function: It will not just buy the stuff that people make; it will help make that stuff better and figure out what to make more of.

Most of the stuff we consume is not necessary. More precisely, it does not provide objective value. When I buy a pair of Nikes instead of Adidas, I buy them because, subjectively, I like one pair more than the other. And when I buy hiking shoes, I do not buy them because I actually need to go hiking but because I think they'd look cool on my walk to the coffee shop.

In a few fields, the objective value actually matters. When I ask a doctor which drug to take, there is often only one correct answer. But for most consumer services and goods, the answer could be anything. The only way to know the answer is to test a variety of answers and see what people seem to like. In such an economy, the central role of most people is to express their preferences. This enables the economy to figure out what to make more of. Fewer people are required for the actual production, but everyone is important.

But there's also another option. Some people might no longer be economically necessary. They will not produce the stuff that everyone buys, and they will not consume much, either. Instead, they'll enjoy improvements in basic living conditions (better housing, cheaper energy, clean air, fast internet) and spend their time on whatever interests them, such as hanging out with each other and reading books.

Would that be sustainable? Can an economy "carry" a large group of people who don't work, hardly consume, and just read books and hang out with each other? Further, can this "poor by choice" group be truly happy and not overthrow the government? Will they not get "radicalized" by seeing others get richer and live in luxury?

I don't know. But strangely enough, something like this is already happening in one of the most advanced economies on earth.

Students for Life

Israel has one of the world's most successful technology ecosystems. It has higher GDP per capita than Finland, Germany, and the UK. It attracts more venture capital per capita than any country except Singapore.

And yet, Israel is home to a significant minority that avoids acquiring skills that are considered economically useful (math, science, geography) and participates in the overall economy at relatively low rates. I am talking about Israel's ultra-orthodox minority, about 13% of the population.

In Israel, ultra-orthodox Jews are exempted from military and national service; instead, they are allowed and incentivized to pursue biblical studies. This arrangement was put in place after the Holocaust in order to preserve and revive a world of Jewish learning that was decimated by the Nazis and their collaborators. The original idea was to exempt and sponsor a few hundred scholars. But over time, the number ballooned to around 150,000 men and hundreds of thousands of dependents.

Israel's secular majority has been trying to cancel this policy and force the ultra-orthodox minority to "contribute their fair share" to the economy. The argument is that the current arrangement is unfair and unsustainable, mainly because the ultra-orthodox minority is growing and is projected to comprise a third of the population within a few decades.

But what if it is sustainable?

I am not here to argue about Israeli politics or to express my personal views. But I find this situation fascinating from a theoretical perspective. Based on everything we discussed above, advanced economies may actually be able to sustain a large population of people who aren't doing "productive work."

Ironically, the more significant challenge for advanced economies might be completely different: How to appease the masses of people who aren't "useful"? Specifically, how to prevent millions of people from getting angry at the huge gaps between their income and the income of the more-productive minority of tech entrepreneurs and investors?

Israel seems to offer an answer to these questions. The country's ultra-orthodox community (or, at least, the people they elect to represent them) seems happy with the current state of affairs. They don't get angry when other people get rich, buy yachts, or fly on fancy vacations. All they want is to live their lives and study Torah.

Karl Marx famously described religion as "the opium of the people." He saw it as an ideological tool that legitimized the interests of the dominant wealthy class. Strangely, in Israel, the wealthy class is trying to convince religious people to throw faith aside and join the economy. Still, religion seems to be "helping" a large group of people remain at peace with their economic condition rather than revolt against growing income inequality.

There's a lesson in there. I'm not saying we should convert everyone to Judaism. But it looks like we'll need some new religions to emerge in order to justify the strange world we're headed for. Most people no longer need to work. Our survival depends on convincing them it's ok to do something else, and on developing systems that enable them to live with dignity. May God help us all!

Have an excellent weekend,